Article series

This is the third of four articles describing different aspects of shipping Hilla apps to production:

- Part 1: Production build

- Part 2: Docker images

- Part 3: CI/CD

- Part 4: Serverless deployment

Automation

CI/CD systems are an important part of modern software development. Continuous integration of code changes into the main branch is typically used to build the application, analyse it statically, run automated tests, scan for vulnerabilities and tag the current state of the main branch. This gives developers constant feedback on the status of the code on the main branch. Continuous delivery of the application to different environments, such as test or production, extends this feedback cycle with additional test scenarios and feedback from end users. Many activities along the continuous integration and continuous delivery process can be automated by CI/CD systems so that they can be repeated as often and as quickly as needed.

These advantages naturally also apply to the development of Hilla apps, so it is advisable to set up a suitable CI/CD system for professional Hilla development. There are many ways to implement a CI/CD system, and the choice of tool depends on many factors. For example, GitHub Actions is a very popular CI/CD system for organizations that have heavily focused their development on GitHub. Another example of a CI/CD system is Jenkins.

The following sections show a simple CI/CD system for a Hilla app based on OpenShift and Tekton.

CI/CD using OpenShift and Tekton

OpenShift is a platform for operating container-based applications. It is based on Kubernetes and is developed by Red Hat. Tekton is an open-source framework for creating CI/CD systems that run in Kubernetes environments. In OpenShift, Tekton is available in the form of the OpenShift Pipelines Operator.

Tekton provides various resources, such as Pipelines, Tasks, Triggers and EventListeners. These resources are Kubernetes custom resources, defined by Custom Resource Definitions (CRDs), and they are combined and configured in YAML files.
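To give an impression of what such a resource looks like, here is a minimal sketch of a Task with a single step. The task name hello-hilla, the alpine image and the echoed text are assumptions chosen purely for illustration; they are not part of the pipeline described in this article.

```yaml
# Minimal illustrative Task: one step that prints a message.
# Name, image and message are hypothetical placeholders.
apiVersion: tekton.dev/v1
kind: Task
metadata:
  name: hello-hilla
  namespace: hilla
spec:
  steps:
    - name: say-hello
      image: alpine
      script: |
        #!/bin/sh
        echo "Hello from Tekton"
```

A Task like this only describes what should run. It is executed when a TaskRun or a Pipeline references it.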

A simple Tekton pipeline

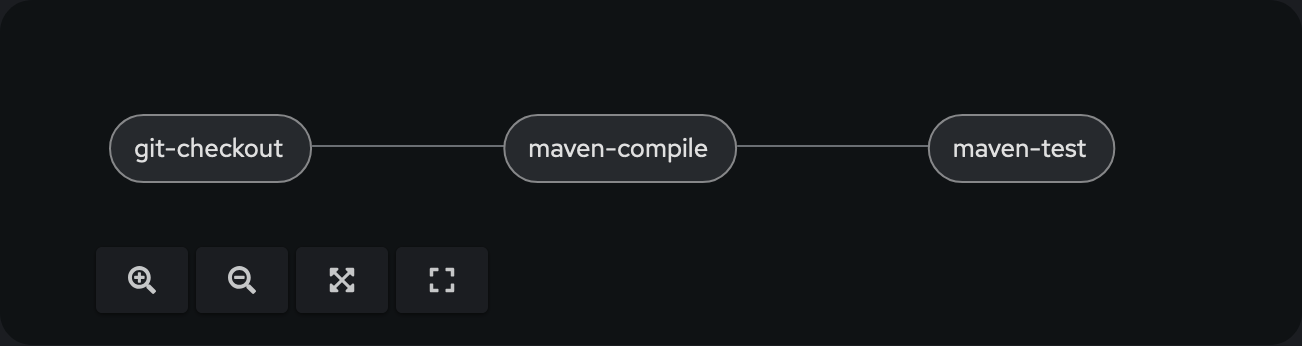

A simple Tekton pipeline for a Hilla app could consist of the following tasks:

- Check out code

- Compile app

- Execute tests

Each task can be implemented individually. Alternatively, existing tasks from the Tekton Hub or so-called Cluster Resolver can be used. The tasks to be used are executed in a pipeline. The following graphic shows the execution of 3 tasks in sequence in a pipeline:

The corresponding YAML file for this pipeline can look like this, for example:

```yaml
apiVersion: tekton.dev/v1
kind: Pipeline
metadata:
  name: hilla-production-build-pipeline
  namespace: hilla
spec:
  params:
    - default: 'git@scm.example.com:projects/hilla-production-build.git'
      name: repo
      type: string
    - default: master
      name: branch
      type: string
  tasks:
    - name: git-checkout
      params:
        - name: url
          value: $(params.repo)
        - name: revision
          value: $(params.branch)
        - name: subdirectory
          value: $(context.pipelineRun.name)
      taskRef:
        kind: Task
        name: git-clone
      workspaces:
        - name: output
          workspace: shared-workspace
    - name: maven-compile
      params:
        - name: MAVEN_IMAGE
          value: 'maven:3-eclipse-temurin-21-alpine'
        - name: CONTEXT_DIR
          value: $(context.pipelineRun.name)
        - name: GOALS
          value:
            - clean
            - compile
      runAfter:
        - git-checkout
      taskRef:
        kind: Task
        name: maven
      workspaces:
        - name: maven-settings
          workspace: maven-settings
        - name: source
          workspace: shared-workspace
        - name: maven-local-repo
          workspace: maven-local-m2
    - name: maven-test
      params:
        - name: MAVEN_IMAGE
          value: 'maven:3-eclipse-temurin-21-alpine'
        - name: CONTEXT_DIR
          value: $(context.pipelineRun.name)
        - name: GOALS
          value:
            - test
      runAfter:
        - maven-compile
      taskRef:
        kind: Task
        name: maven
      workspaces:
        - name: maven-settings
          workspace: maven-settings
        - name: source
          workspace: shared-workspace
        - name: maven-local-repo
          workspace: maven-local-m2
  workspaces:
    - name: shared-workspace
    - name: maven-settings
    - name: maven-local-m2
```

The git-checkout task uses git-clone. It clones a Git repository and checks out the desired branch into a workspace directory. This directory is available to all tasks within a pipeline execution (PipelineRun). After the checkout, the maven task is used twice. This task provides an execution environment for Maven. First, it is executed as maven-compile with the Maven goals clean compile in order to compile the application. Then it is executed as maven-test to run the automated tests. Both tasks use the same workspace directory and therefore have access to the checked-out code.
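A pipeline only runs once a PipelineRun binds its workspaces to actual storage. The following is a minimal sketch of such a PipelineRun. The generateName prefix, the storage size and the use of emptyDir volumes for the two Maven workspaces are assumptions made for illustration. Git credentials for the SSH repository URL are not covered here.

```yaml
# Illustrative PipelineRun; sizes and volume types are assumptions.
apiVersion: tekton.dev/v1
kind: PipelineRun
metadata:
  # generateName yields a unique run name, which the pipeline also
  # uses as the checkout subdirectory via $(context.pipelineRun.name)
  generateName: hilla-production-build-pipeline-run-
  namespace: hilla
spec:
  pipelineRef:
    name: hilla-production-build-pipeline
  params:
    - name: branch
      value: master
  workspaces:
    # Shared between all tasks of this run, so it needs a real volume
    - name: shared-workspace
      volumeClaimTemplate:
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi
    # emptyDir volumes are not shared between tasks; sufficient here
    # when no custom settings.xml and no dependency cache are needed
    - name: maven-settings
      emptyDir: {}
    - name: maven-local-m2
      emptyDir: {}
```

Because the manifest uses generateName, it would be submitted with oc create -f rather than oc apply -f. Using emptyDir for maven-local-m2 means every task downloads its dependencies again; a persistent volume could serve as a cache instead, subject to the cluster's restrictions on binding several volume claims to one task.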

Extension for production build

The pipeline shown can be extended flexibly with additional tasks. The creation of the production build of a Hilla app in the form of an executable JAR file can take place after the maven-test task in a new maven-package task:

```yaml
    - name: maven-package
      taskRef:
        name: maven
      params:
        - name: MAVEN_IMAGE
          value: 'maven:3-eclipse-temurin-21-alpine'
        - name: CONTEXT_DIR
          value: $(context.pipelineRun.name)
        - name: GOALS
          value:
            - -DskipTests
            - package
            - -Pproduction
      workspaces:
        - name: maven-settings
          workspace: maven-settings
        - name: source
          workspace: shared-workspace
        - name: maven-local-repo
          workspace: maven-local-m2
      runAfter:
        - maven-test
```

Alternatively, the production build of the Hilla app can also be created as a native image. The maven task already used above can serve this purpose as well. However, the MAVEN_IMAGE parameter must point to an image that supports the creation of a native image using GraalVM:

```yaml
    - name: maven-package
      taskRef:
        name: maven
      params:
        - name: MAVEN_IMAGE
          value: 'ghcr.io/graalvm/native-image-community:21.0.2'
        - name: CONTEXT_DIR
          value: $(context.pipelineRun.name)
        - name: GOALS
          value:
            - -DskipTests
            - package
            - -Pproduction
            - -Pnative
            - native:compile
      workspaces:
        - name: maven-settings
          workspace: maven-settings
        - name: source
          workspace: shared-workspace
        - name: maven-local-repo
          workspace: maven-local-m2
      runAfter:
        - maven-test
```

Extension for creating the Docker image

The next step is to extend the pipeline with a task that creates a Docker image from the generated production build. OpenShift offers different approaches for this. Here, the Docker image is built from a Dockerfile. Creating the required BuildConfig and starting the actual build are done in a separate task:

```yaml
apiVersion: tekton.dev/v1
kind: Task
metadata:
  name: build-docker-image
  namespace: hilla
spec:
  params:
    - name: context-dir
      type: string
  steps:
    - env:
        - name: HOME
          value: '/tekton/home'
        - name: CONTEXT_DIR
          value: $(params.context-dir)
        - name: WORKSPACE_SOURCE_PATH
          value: $(workspaces.source.path)
      image: registry.redhat.io/openshift4/ose-cli-rhel9:v4.16
      name: build-docker-image
      computeResources:
        requests:
          cpu: 25m
          memory: 128Mi
      script: |
        #!/usr/bin/env bash
        set -eu
        cd "${WORKSPACE_SOURCE_PATH}/${CONTEXT_DIR}"
        BUILD_NAME="hilla-production-build"
        # Remove the BuildConfig and ImageStream left over from an earlier run
        oc delete buildconfigs --selector="build=${BUILD_NAME}"
        oc delete imagestreams --selector="build=${BUILD_NAME}"
        # Create a binary Docker build and start it with the current directory as input
        oc new-build --binary=true --strategy=docker --name="${BUILD_NAME}"
        oc start-build "${BUILD_NAME}" --from-dir=. --follow --wait
      securityContext:
        runAsNonRoot: true
        runAsUser: 65532
  workspaces:
    - name: source
```

The task uses an image that contains the command line tool oc. The script first deletes any BuildConfig and ImageStream left over from an earlier run and then creates a new BuildConfig with the command oc new-build. The parameter --binary=true results in a build that takes its input from files that are uploaded to it, in this case the generated production build. The created BuildConfig is then referenced with the command oc start-build to start the build. All files from the current directory are copied to the build process and are available for the creation of the Docker image.
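For orientation, the BuildConfig that oc new-build generates for a binary Docker build looks roughly like the following sketch. This is an approximation under the assumption that the default output (an ImageStreamTag named after the build) is used; the exact fields and defaults can differ between OpenShift versions.

```yaml
# Approximate shape of the generated BuildConfig (not exact output).
apiVersion: build.openshift.io/v1
kind: BuildConfig
metadata:
  name: hilla-production-build
  labels:
    # Label that the selector build=${BUILD_NAME} in the script matches
    build: hilla-production-build
spec:
  source:
    # Binary source: the input is uploaded by oc start-build --from-dir
    type: Binary
    binary: {}
  strategy:
    # Docker strategy: the image is built from the uploaded Dockerfile
    type: Docker
    dockerStrategy: {}
  output:
    to:
      kind: ImageStreamTag
      name: hilla-production-build:latest
```

The generated resource can be inspected on a cluster with oc get buildconfig hilla-production-build -o yaml.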

The new build-docker-image task is added to the existing pipeline after the maven-package task. This ensures that both the required Dockerfile (from the checked-out repository) and the generated production build (from the previous task) are available in the shared workspace:

```yaml
    - name: build-docker-image
      taskRef:
        name: build-docker-image
      params:
        - name: context-dir
          value: $(context.pipelineRun.name)
      workspaces:
        - name: source
          workspace: shared-workspace
      runAfter:
        - maven-package
```

The build-docker-image task still has some shortcomings. For example, the name of a build is not yet assigned dynamically, and there is no suitable versioning. The version to be used can be read from the pom.xml. This can be done in a separate determine-version task:

```yaml
apiVersion: tekton.dev/v1
kind: Task
metadata:
  name: determine-version
  namespace: hilla
spec:
  params:
    - name: context-dir
      type: string
  results:
    - name: version
      description: Value of project.version in pom.xml
  steps:
    - env:
        - name: HOME
          value: '/tekton/home'
        - name: CONTEXT_DIR
          value: $(params.context-dir)
        - name: WORKSPACE_SOURCE_PATH
          value: $(workspaces.source.path)
      image: eclipse-temurin:21-jre
      name: determine-version
      computeResources:
        requests:
          cpu: 50m
          memory: 256Mi
      script: |
        #!/usr/bin/env bash
        set -eu
        cd "${WORKSPACE_SOURCE_PATH}/${CONTEXT_DIR}"
        # Ask Maven for project.version; -q and -DforceStdout print only the value
        VERSION=$(./mvnw org.apache.maven.plugins:maven-help-plugin:3.5.0:evaluate -Dexpression=project.version -q -DforceStdout)
        # Write the version without a trailing newline so later tasks can use it verbatim
        printf '%s' "${VERSION}" | tee "$(results.version.path)"
      securityContext:
        runAsNonRoot: true
        runAsUser: 65532
  workspaces:
    - name: source
```

The determined version is published as a result and can be used in subsequent tasks. The new task determine-version is therefore integrated in the pipeline after the task maven-package and before the task build-docker-image:

```yaml
    - name: determine-version
      taskRef:
        name: determine-version
      params:
        - name: context-dir
          value: $(context.pipelineRun.name)
      workspaces:
        - name: source
          workspace: shared-workspace
      runAfter:
        - maven-package
```

The task build-docker-image is then extended by the parameters build-name and build-version:

```yaml
apiVersion: tekton.dev/v1
kind: Task
metadata:
  name: build-docker-image
  namespace: hilla
spec:
  params:
    - name: context-dir
      type: string
    - name: build-name
      type: string
    - name: build-version
      type: string
  steps:
    - env:
        - name: HOME
          value: '/tekton/home'
        - name: CONTEXT_DIR
          value: $(params.context-dir)
        - name: BUILD_NAME
          value: $(params.build-name)
        - name: BUILD_VERSION
          value: $(params.build-version)
        - name: WORKSPACE_SOURCE_PATH
          value: $(workspaces.source.path)
      image: registry.redhat.io/openshift4/ose-cli-rhel9:v4.16
      name: build-docker-image
      computeResources:
        requests:
          cpu: 25m
          memory: 128Mi
      script: |
        #!/usr/bin/env bash
        set -eu
        cd "${WORKSPACE_SOURCE_PATH}/${CONTEXT_DIR}"
        # Remove the BuildConfig and ImageStream left over from an earlier run
        oc delete buildconfigs --selector="build=${BUILD_NAME}"
        oc delete imagestreams --selector="build=${BUILD_NAME}"
        # Create a binary Docker build and start it with the current directory as input
        oc new-build --binary=true --strategy=docker --name="${BUILD_NAME}"
        oc start-build "${BUILD_NAME}" --from-dir=. --follow --wait
      securityContext:
        runAsNonRoot: true
        runAsUser: 65532
  workspaces:
    - name: source
```

When using the build-docker-image task in the pipeline, the two new parameters are set. The parameter build-name receives a project-specific value, and the parameter build-version receives the result of the task determine-version as a value:

```yaml
    - name: build-docker-image
      taskRef:
        name: build-docker-image
      params:
        - name: context-dir
          value: $(context.pipelineRun.name)
        - name: build-name
          value: hilla-production-build
        - name: build-version
          value: $(tasks.determine-version.results.version)
      workspaces:
        - name: source
          workspace: shared-workspace
      runAfter:
        - determine-version
```

Extension for publishing the Docker image

The Docker image that has been created still has to be published to a suitable container registry. The configuration required for this can be passed to the new-build command. To do this, the task build-docker-image is extended and renamed to build-and-push-docker-image:

```yaml
apiVersion: tekton.dev/v1
kind: Task
metadata:
  name: build-and-push-docker-image
  namespace: hilla
spec:
  params:
    - name: context-dir
      type: string
    - name: build-name
      type: string
    - name: build-version
      type: string
  steps:
    - env:
        - name: HOME
          value: '/tekton/home'
        - name: CONTEXT_DIR
          value: $(params.context-dir)
        - name: BUILD_NAME
          value: $(params.build-name)
        - name: BUILD_VERSION
          value: $(params.build-version)
        - name: WORKSPACE_SOURCE_PATH
          value: $(workspaces.source.path)
      image: registry.redhat.io/openshift4/ose-cli-rhel9:v4.16
      name: build-and-push-docker-image
      computeResources:
        requests:
          cpu: 25m
          memory: 128Mi
      script: |
        #!/usr/bin/env bash
        set -eu
        cd "${WORKSPACE_SOURCE_PATH}/${CONTEXT_DIR}"
        # Remove the BuildConfig and ImageStream left over from an earlier run
        oc delete buildconfigs --selector="build=${BUILD_NAME}"
        oc delete imagestreams --selector="build=${BUILD_NAME}"
        # Create a binary Docker build whose output is pushed to the external registry
        oc new-build --binary=true --strategy=docker --name="${BUILD_NAME}" --to-docker=true --to="corporate.registry.example.com/${BUILD_NAME}:${BUILD_VERSION}"
        oc start-build "${BUILD_NAME}" --from-dir=. --follow --wait
      securityContext:
        runAsNonRoot: true
        runAsUser: 65532
  workspaces:
    - name: source
```

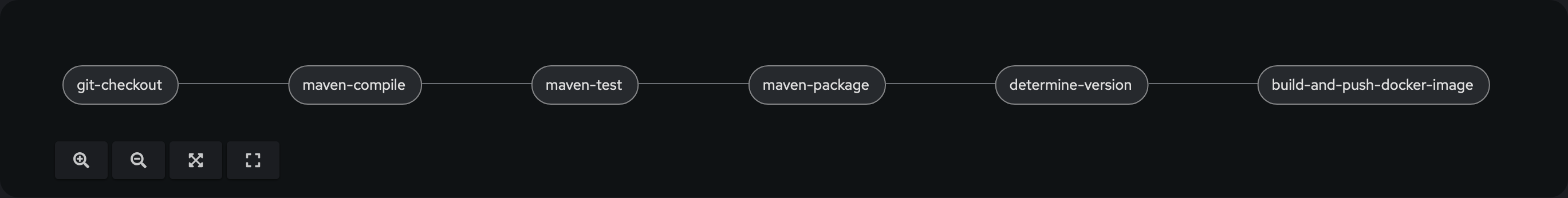

The additional parameters --to-docker and --to ensure that the Docker image created is then published with the name ${BUILD_NAME} and the tag ${BUILD_VERSION} to the Docker registry corporate.registry.example.com. The pipeline must now be updated so that it also uses the renamed task build-and-push-docker-image. The pipeline now has the following design:
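The updated pipeline entry is not listed in the article. A minimal sketch, assuming that only the task reference changes and that the pipeline task is renamed to match (the pipeline task name is a free choice), could look like this:

```yaml
    # Sketch of the pipeline task after the rename; parameters and
    # workspaces are carried over unchanged from build-docker-image
    - name: build-and-push-docker-image
      taskRef:
        name: build-and-push-docker-image
      params:
        - name: context-dir
          value: $(context.pipelineRun.name)
        - name: build-name
          value: hilla-production-build
        - name: build-version
          value: $(tasks.determine-version.results.version)
      workspaces:
        - name: source
          workspace: shared-workspace
      runAfter:
        - determine-version
```

Pushing to an external registry usually also requires credentials for that registry to be available to the build, which this sketch does not cover.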

Summary

This article used Tekton in OpenShift to show, by way of example tasks and a pipeline, how a production build of a Hilla app can be created and how a Docker image based on it can be built and published. The degree of automation achieved allows continuous delivery of the Hilla app to production without manual steps.

Part 4 of the article series describes how a Hilla app, which is available as a production build in a Docker image, can be run as efficiently and scalably as possible as a serverless deployment in container environments.