Article series

This is the fourth of four articles describing different aspects of shipping Hilla apps to production:

- Part 1: Production Build

- Part 2: Docker Images

- Part 3: CI/CD

- Part 4: Serverless Deployment

Serverless Deployment

A production build of a Hilla app as an executable JAR file or as a Native Image is characterized by the following properties:

- Packaged as a Docker container, the app can be deployed quickly and flexibly in container environments.

- As a Native Image or optimized JAR file, the Hilla app starts very quickly.

- As a Native Image in particular, the Hilla app has a low memory footprint.

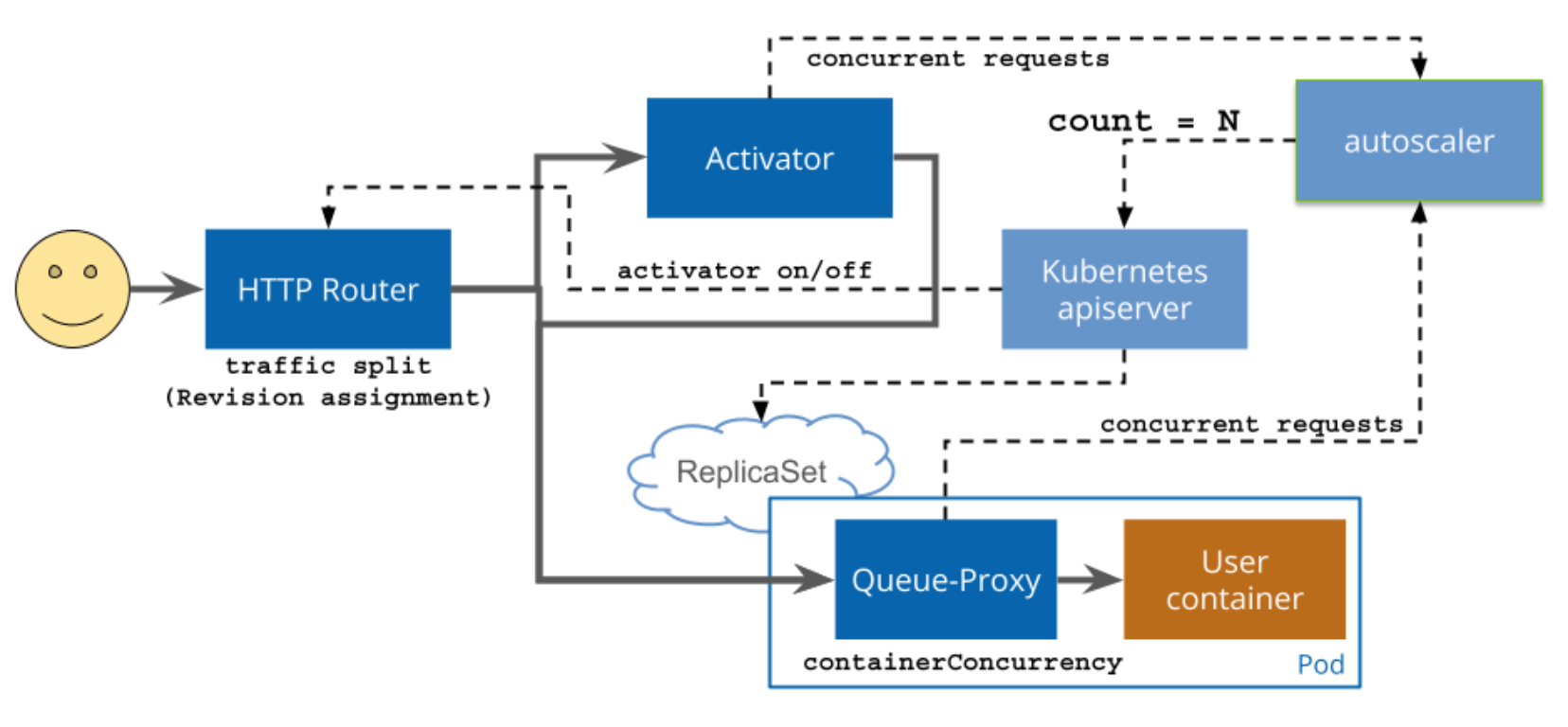

In addition, Hilla apps are stateless by default. Together, these properties allow Hilla apps to scale very well. In Kubernetes environments, this kind of scaling can be achieved, for example, with a regular Deployment combined with a Horizontal Pod Autoscaler. Alternatively, a serverless deployment can be used. With this kind of deployment, the resources and configuration required for deployment and scaling are abstracted further, which results in a simpler and more compact configuration.

Knative is a framework that supports this type of deployment in Kubernetes environments through its Knative Serving component. The following image shows the flow of a request from a user to an application that is provided using Knative Serving:

Source: https://knative.dev/docs/serving/request-flow/

The Activator receives requests for applications that are currently scaled to zero, buffers them, and signals the Autoscaler to start pods. The Autoscaler compares the number of pods currently running for an application with the current request load and adjusts the number of pods accordingly. The Queue-Proxy, a sidecar container in each pod, queues incoming requests until the application container can process them and reports metrics to the Autoscaler.
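To make the buffering step more concrete, here is a minimal Java sketch of the Activator's role in this flow. It is not Knative code: the class `ActivatorSketch` and its methods are hypothetical, and the real components communicate over the network and via metrics rather than through direct method calls.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

/**
 * Hypothetical, heavily simplified model of the Activator (not Knative code):
 * requests that arrive while no pod is ready are buffered, a scale-up is
 * requested, and the buffer is drained once a pod becomes ready.
 */
class ActivatorSketch {
    private final Queue<String> buffer = new ArrayDeque<>();
    private final List<String> forwarded = new ArrayList<>();
    private int readyPods = 0;
    private int scaleUpSignals = 0;

    /** Forwards the request if a pod is ready, otherwise buffers it. */
    void handle(String request) {
        if (readyPods > 0) {
            forwarded.add(request);
        } else {
            buffer.add(request);
            // Signal the (hypothetical) autoscaler only for the first buffered request.
            if (buffer.size() == 1) {
                scaleUpSignals++;
            }
        }
    }

    /** Called once a pod has passed its readiness check; drains the buffer in arrival order. */
    void podBecameReady() {
        readyPods++;
        while (!buffer.isEmpty()) {
            forwarded.add(buffer.poll());
        }
    }

    int bufferedCount() { return buffer.size(); }

    List<String> forwarded() { return forwarded; }

    int scaleUpSignals() { return scaleUpSignals; }
}
```

In this model, two requests arriving during a cold start are held back, trigger a single scale-up signal, and are forwarded in their original order as soon as `podBecameReady()` is called.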

The following YAML file shows a configuration for a serverless deployment of a Hilla app whose production build is available as a Docker image:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hilla-production-build-serverless
spec:
  template:
    spec:
      containers:
        - image: corporate.registry.example.com/hilla-production-build:1.0.0
```

Scaling

When working with Knative, applications are scaled by default with the Knative Pod Autoscaler (KPA). A special feature of the KPA is scale to zero. If this feature is enabled, which is the default, Knative can shut down all pods of an application when the application has received no traffic for a configurable period of time. This can help to save costs and energy when operating a Hilla app and to use a Kubernetes cluster more efficiently.
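The basic idea behind scale to zero can be expressed as a simple idle check. The following Java sketch is a hypothetical simplification: the class `ScaleToZeroSketch` is invented for illustration, and the real KPA evaluates averaged concurrency over a stable window (60 seconds by default) plus a grace period, configured through settings such as `stable-window` and `scale-to-zero-grace-period` in the `config-autoscaler` ConfigMap, rather than the timestamp of the last request.

```java
import java.time.Duration;
import java.time.Instant;

/**
 * Hypothetical, simplified scale-to-zero check (not Knative code): if no
 * request has arrived for longer than the idle window, the desired number
 * of pods becomes 0.
 */
class ScaleToZeroSketch {
    private final Duration idleWindow;
    private Instant lastRequest;

    ScaleToZeroSketch(Duration idleWindow, Instant start) {
        this.idleWindow = idleWindow;
        this.lastRequest = start; // treat startup as the last activity
    }

    /** Remembers the most recent request time. */
    void recordRequest(Instant at) {
        if (at.isAfter(lastRequest)) {
            lastRequest = at;
        }
    }

    /** True once the app has been idle for longer than the idle window. */
    boolean shouldScaleToZero(Instant now) {
        return Duration.between(lastRequest, now).compareTo(idleWindow) > 0;
    }
}
```

For example, with an idle window of 60 seconds and a last request at 12:00:50, the check returns false at 12:01:40 and true at 12:01:51.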

If the application receives a large or fluctuating amount of traffic, the KPA can also scale the application up or down, automatically starting additional pods or terminating pods that are no longer required. Knative applies default scaling settings, but to adapt the scaling behavior to the application, the configuration shown above can be extended. A very simple form of scaling can be achieved using the containerConcurrency property:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hilla-production-build-serverless
spec:
  template:
    spec:
      containerConcurrency: 300
      containers:
        - image: corporate.registry.example.com/hilla-production-build:1.0.0
```
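The containerConcurrency value acts as a hard limit per pod: the Queue-Proxy lets at most that many requests through to the application container at the same time and queues the rest. The following Java sketch models only this admission behavior with a `java.util.concurrent.Semaphore`; the class `HardConcurrencyLimit` is hypothetical and not part of Knative or Hilla.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

/**
 * Hypothetical model of a hard per-pod concurrency limit such as
 * containerConcurrency: at most `limit` requests run at the same time,
 * further callers wait until a slot becomes free.
 */
class HardConcurrencyLimit {
    private final Semaphore slots;
    private final AtomicInteger inFlight = new AtomicInteger();
    private final AtomicInteger maxObserved = new AtomicInteger();

    HardConcurrencyLimit(int limit) {
        // Fair semaphore: waiting requests are admitted roughly in arrival order, like a queue.
        this.slots = new Semaphore(limit, true);
    }

    /** Runs the request once a slot is free; blocks while `limit` requests are in flight. */
    <T> T handle(Supplier<T> request) throws InterruptedException {
        slots.acquire();
        try {
            int now = inFlight.incrementAndGet();
            maxObserved.accumulateAndGet(now, Math::max); // track peak concurrency
            return request.get();
        } finally {
            inFlight.decrementAndGet();
            slots.release();
        }
    }

    int maxObservedConcurrency() { return maxObserved.get(); }
}
```

Even if ten requests arrive at once, a `HardConcurrencyLimit(3)` never runs more than three of them at the same time; the others simply wait, which is what distinguishes a hard limit from a scaling target.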

The containerConcurrency property defines a hard limit on the number of requests that a single pod processes simultaneously. Additional requests are queued, and the KPA starts further pods as required. As an alternative or in addition to this hard limit, scaling can also be configured based on the utilization of individual pods. Various metrics can be used for this: the KPA supports concurrency and rps (requests per second). If you want to scale based on CPU or memory utilization, you can use Knative in conjunction with the Horizontal Pod Autoscaler (HPA), but then you cannot use the scale-to-zero functionality (see Supported Autoscaler types). The following configuration uses the concurrency metric with a target value:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hilla-production-build-serverless
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/metric: "concurrency"
        autoscaling.knative.dev/target: "300"
    spec:
      containers:
        - image: corporate.registry.example.com/hilla-production-build:1.0.0
```

This configuration defines a soft limit of 300 concurrent requests per pod. Unlike the hard limit set with containerConcurrency, this target can be exceeded temporarily, for example during short traffic peaks, until additional pods are available.
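Simplified, the KPA derives the desired number of pods from the observed concurrency and the target. Knative additionally applies a target utilization, 70% by default (annotation `autoscaling.knative.dev/target-utilization-percentage`), so that new pods are started before the existing ones reach the target. The following Java sketch shows only this core calculation under those assumptions; averaging windows, panic mode, and scale rate limits of the real KPA are omitted, and the class `DesiredPods` is hypothetical. The `minScale` and `maxScale` parameters loosely correspond to the `autoscaling.knative.dev/min-scale` and `autoscaling.knative.dev/max-scale` annotations.

```java
/**
 * Hypothetical, simplified version of the concurrency-based scaling decision:
 * desired pods = ceil(observed concurrency / (target * utilization)),
 * clamped to [minScale, maxScale]. Not the actual KPA implementation.
 */
final class DesiredPods {
    private DesiredPods() {}

    static int compute(double observedConcurrency, int target,
                       double targetUtilization, int minScale, int maxScale) {
        if (target <= 0 || targetUtilization <= 0 || targetUtilization > 1) {
            throw new IllegalArgumentException("invalid target or utilization");
        }
        if (minScale < 0 || maxScale < minScale) {
            // Unlike Knative, 0 does not mean "unlimited" for maxScale in this sketch.
            throw new IllegalArgumentException("invalid scale bounds");
        }
        double effectiveTargetPerPod = target * targetUtilization;
        int desired = (int) Math.ceil(observedConcurrency / effectiveTargetPerPod);
        // minScale = 0 allows scale to zero when there is no traffic at all.
        return Math.max(minScale, Math.min(maxScale, desired));
    }
}
```

With the target of 300 from the configuration above and a utilization of 70%, 1,000 concurrent requests result in ceil(1000 / 210) = 5 pods in this simplified model; with a minScale of 0 and no traffic, the result is 0, which corresponds to scale to zero.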

Liveness and Readiness Probes

A useful extension to the deployment configuration shown above is to add liveness and readiness probes. With the help of these probes, Knative can recognize whether a pod has started successfully and whether it is still running correctly. Knative needs this information to start and keep running the number of pods required by the scaling configuration.

As Hilla apps are based on Spring Boot, the endpoints required for the liveness and readiness probes can be provided by Spring Boot Actuator. To do this, the Actuator starter must first be added as a dependency in the pom.xml:

```xml
<!-- ... -->
<dependencies>
    <!-- ... -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>
    <!-- ... -->
</dependencies>
<!-- ... -->
```

The health endpoint can then be enabled in the application.properties:

```properties
# Disable all default endpoints
management.endpoints.enabled-by-default=false
# Enable the health endpoint
management.endpoint.health.enabled=true
# Probes are enabled automatically if the app runs in a Kubernetes environment, or manually like this
management.endpoint.health.probes.enabled=true
```

For liveness and readiness probes, dedicated endpoints are available as sub-paths of the health endpoint.

A GET request to the path /actuator/health/liveness of the Hilla app calls the liveness endpoint. From Spring Boot Actuator’s point of view, an application is considered live as soon as the ApplicationContext has been started and refreshed. In this case, the following response with status code 200 is received:

{ "status" : "UP" }

If the application is not yet live or is in a faulty state, you will receive a response with the status code 503:

{ "status" : "DOWN" }

A GET request to the path /actuator/health/readiness of the Hilla app calls the readiness endpoint. From Spring Boot Actuator’s point of view, an application is considered ready as soon as it is able to receive and process incoming requests. In this case, the following response with status code 200 is received:

{ "status" : "UP" }

If the application is not yet able to receive and process incoming requests, you will receive a response with status code 503:

{ "status" : "OUT_OF_SERVICE" }

This can happen, for example, if the application is already live, but CommandLineRunner or ApplicationRunner beans are still executing tasks as part of the startup process.
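To try the endpoints outside of Kubernetes, they can be called with any HTTP client. The following sketch uses the JDK's `java.net.http.HttpClient`; the class `HealthCheckClient`, the base URL, and the regex-based parsing of the `status` field are assumptions made for illustration (a JSON library would be the more robust choice in real code).

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical client for the Actuator probe endpoints described above.
 * The base URI (e.g. http://localhost:8080) is an assumption.
 */
class HealthCheckClient {
    // Matches the first "status" field, e.g. { "status" : "UP" }
    private static final Pattern STATUS = Pattern.compile("\"status\"\\s*:\\s*\"([A-Z_]+)\"");

    private final HttpClient client = HttpClient.newBuilder()
            .connectTimeout(Duration.ofSeconds(1))
            .build();
    private final URI baseUri;

    HealthCheckClient(URI baseUri) {
        this.baseUri = baseUri;
    }

    /** Extracts the status value from a health response body. */
    static String parseStatus(String body) {
        Matcher m = STATUS.matcher(body);
        return m.find() ? m.group(1) : "UNPARSEABLE";
    }

    /** Calls /actuator/health/{probe} ("liveness" or "readiness") and returns "<code> <status>". */
    String check(String probe) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(baseUri.resolve("/actuator/health/" + probe))
                .timeout(Duration.ofSeconds(1))
                .GET()
                .build();
        // 503 responses do not throw; the status code is simply reported.
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.statusCode() + " " + parseStatus(response.body());
    }
}
```

For an app running locally on port 8080, `new HealthCheckClient(URI.create("http://localhost:8080")).check("readiness")` would be expected to return `200 UP`, or `503 OUT_OF_SERVICE` while startup tasks are still running.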

In addition to these standard indicators, custom health indicators can also be implemented if required.

For the readiness and liveness probes of Knative, the health endpoint can be used as follows:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hilla-production-build-serverless
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/metric: "concurrency"
        autoscaling.knative.dev/target: "300"
    spec:
      containers:
        - image: corporate.registry.example.com/hilla-production-build:1.0.0
          # Actuator exposes its endpoints below /actuator by default
          readinessProbe:
            httpGet:
              path: /actuator/health/readiness
              port: 8080
              scheme: HTTP
            periodSeconds: 5
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 3
          livenessProbe:
            httpGet:
              path: /actuator/health/liveness
              port: 8080
              scheme: HTTP
            periodSeconds: 5
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 6
```

Kubernetes considers an HTTP probe successful if the endpoint responds within the timeout with a status code between 200 and 399. Because Actuator maps the status UP to status code 200 and the statuses DOWN and OUT_OF_SERVICE to 503, the probes effectively check the reported health status. The readiness probe calls the readiness endpoint every 5 seconds (periodSeconds) and waits at most 1 second for a response (timeoutSeconds). If the probe fails 3 times in a row (failureThreshold), that is, for roughly 15 seconds, the pod is marked as not ready and receives no further requests until the probe succeeds again (successThreshold). The liveness probe works the same way, but is configured with a higher failureThreshold: if the liveness endpoint fails 6 times in a row, that is, for roughly 30 seconds, Kubernetes restarts the container, because it can be assumed that the application is no longer running correctly.
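The threshold logic described above can be modeled as a small state machine. This Java sketch is a hypothetical simplification of the kubelet's behavior, not its actual implementation: the class `ProbeTracker` treats status codes 200 to 399 as success, counts a timeout as a failure, and only changes its result after failureThreshold consecutive failures or successThreshold consecutive successes. In Kubernetes, a readiness probe starts in the failed state and a liveness probe in the successful state, which the `initiallyHealthy` parameter reflects.

```java
/**
 * Hypothetical model of how Kubernetes evaluates an HTTP probe over time.
 */
class ProbeTracker {
    private final int successThreshold;
    private final int failureThreshold;
    private int consecutiveSuccesses;
    private int consecutiveFailures;
    private boolean healthy;

    ProbeTracker(int successThreshold, int failureThreshold, boolean initiallyHealthy) {
        if (successThreshold < 1 || failureThreshold < 1) {
            throw new IllegalArgumentException("thresholds must be >= 1");
        }
        this.successThreshold = successThreshold;
        this.failureThreshold = failureThreshold;
        this.healthy = initiallyHealthy;
    }

    /** HTTP probes succeed for status codes 200 to 399. */
    static boolean isSuccess(int statusCode) {
        return statusCode >= 200 && statusCode < 400;
    }

    /**
     * Records one probe attempt and returns the resulting state.
     * A negative status code stands for a timeout (no response within timeoutSeconds).
     */
    boolean record(int statusCode) {
        if (isSuccess(statusCode)) {
            consecutiveFailures = 0;
            consecutiveSuccesses++;
            if (consecutiveSuccesses >= successThreshold) {
                healthy = true;
            }
        } else {
            consecutiveSuccesses = 0;
            consecutiveFailures++;
            if (consecutiveFailures >= failureThreshold) {
                healthy = false;
            }
        }
        return healthy;
    }

    boolean isHealthy() {
        return healthy;
    }
}
```

With periodSeconds set to 5, `new ProbeTracker(1, 3, false)` for readiness flips to not ready after three failed checks (roughly 15 seconds), and `new ProbeTracker(1, 6, true)` for liveness only reports a failure after six failed checks (roughly 30 seconds), matching the configuration above.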

Summary

Serverless deployments with scale-to-zero functionality enable efficient and scalable operation of applications in container environments. Knative Serving offers a simple way of configuring this kind of deployment. Stateless Hilla apps are ideal for this type of deployment, especially as a production build in the form of an executable JAR file or as a Native Image.